Eye/gaze tracking in video

Project description

Input: a video sequence (preferably acquired from a webcam) of a user sitting in front of a computer. We assume that some document (text, graphics, a medical image or a photo) is displayed on the screen.

Goal: detect the user's focus of attention and how the region of interest changes over time. Note that we are ONLY interested in the user's regions of interest, not in the trajectory of the eye itself. Based on this, the regions of interest can be drawn on the displayed image.

Tasks to do:

- develop an application able to detect and track the eye in video;

- identify the corresponding observed (focused) locations (areas) on the screen and the trajectory of the gaze on the screen;

- mark the regions of interest and their evolution/change in time
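As a rough illustration of the last task, the sketch below bins gaze points into screen cells and reports the most visited cell in each time window. The function name `roi_by_window`, the 100-pixel cell size, the 1-second window and the sample data are all assumptions made for this example; they do not come from the project's code.

```python
from collections import Counter

def roi_by_window(gaze, cell=100, window=1.0):
    """Return {window index: most visited (col, row) cell}.

    gaze is a list of (time_seconds, x, y) screen points.
    cell and window are illustrative defaults, not project values.
    """
    counts = {}
    for t, x, y in gaze:
        w = int(t // window)                      # which time window
        key = (int(x // cell), int(y // cell))    # which screen cell
        counts.setdefault(w, Counter())[key] += 1
    return {w: c.most_common(1)[0][0] for w, c in sorted(counts.items())}

# Hypothetical gaze samples: the focus moves from one cell to another.
gaze = [(0.1, 120, 80), (0.4, 130, 90), (0.8, 510, 300),
        (1.2, 520, 310), (1.6, 530, 320)]
regions = roi_by_window(gaze)
```

Comparing the winning cell from one window to the next gives a simple view of how the region of interest changes over time.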

Tools

OpenCV is a computer vision library originally developed by Intel. It is free for commercial and research use under the open source BSD license. The library is cross-platform and runs on Windows, Mac OS X, Linux, PSP, VCRT (Real-Time OS on Smart camera) and other embedded devices.

Emgu CV is a cross-platform .NET wrapper for the OpenCV image-processing library. It allows OpenCV functions to be called from .NET-compatible languages such as C#, VB, VC++ and IronPython.

C# is a multi-paradigm programming language encompassing functional, imperative, generic, object-oriented (class-based), and component-oriented programming disciplines. It was developed by Microsoft within the .NET initiative and later approved as a standard by Ecma (ECMA-334) and ISO (ISO/IEC 23270). C# is one of the programming languages designed for the Common Language Infrastructure.

MATLAB is a numerical computing environment and fourth-generation programming language. Developed by The MathWorks, MATLAB allows matrix manipulation, plotting of functions and data, implementation of algorithms, creation of user interfaces, and interfacing with programs in other languages. Although MATLAB itself is numeric only, an optional toolbox uses the MuPAD symbolic engine to give access to computer algebra capabilities. An additional package, Simulink, adds graphical multidomain simulation and Model-Based Design for dynamic and embedded systems.

Implementation

We used Haar-like features for face detection. Haar-like features are digital image features used in object recognition.
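To make the idea of a Haar-like feature concrete, here is a minimal pure-Python sketch of a two-rectangle feature computed from an integral image (summed-area table). It is only an illustration: the project itself relied on the detectors available through OpenCV and Emgu CV, and the function names and the toy 4x4 patch below are assumptions.

```python
def integral_image(img):
    """Summed-area table with an extra leading row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left (x, y), size w x h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]

def two_rect_vertical_feature(ii, x, y, w, h):
    """Lower-half sum minus upper-half sum (h is assumed to be even)."""
    half = h // 2
    top = rect_sum(ii, x, y, w, half)
    bottom = rect_sum(ii, x, y + half, w, half)
    return bottom - top

# Toy patch: a dark band (e.g. the eyes) above a brighter band (the cheeks).
patch = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(patch)
feature_value = two_rect_vertical_feature(ii, 0, 0, 4, 4)
```

A large positive value indicates a dark-over-bright pattern inside the window. A cascade classifier combines many such features at different positions and scales.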

We then reduced the detected face region and split it into the two eye regions.
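One possible way to do this split is sketched below: keep a horizontal band in the upper part of the face rectangle and cut it into a left and a right half. The 20% and 55% band limits and the function name `eye_search_regions` are assumptions chosen for illustration, not the values used in the project.

```python
def eye_search_regions(face, top_pct=20, bottom_pct=55):
    """Split a face box (x, y, w, h) into two eye search boxes.

    top_pct and bottom_pct bound the band, as percentages of the face
    height. Both values are illustrative assumptions.
    """
    x, y, w, h = face
    band_y = y + h * top_pct // 100
    band_h = h * (bottom_pct - top_pct) // 100
    half_w = w // 2
    left = (x, band_y, half_w, band_h)                 # image-left half
    right = (x + half_w, band_y, w - half_w, band_h)   # image-right half
    return left, right

# Hypothetical face rectangle returned by a face detector.
left_region, right_region = eye_search_regions((100, 50, 200, 200))
```

Restricting the eye detector to these two boxes reduces both the search time and the number of false detections elsewhere on the face.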

We also used Haar-like features to detect the eye regions. Contrast enhancement was then applied to each detected eye region.
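The text does not say which contrast enhancement method was used. As one simple possibility, the sketch below applies linear contrast stretching to a grayscale patch, so that the darkest pixel maps to 0 and the brightest to 255. The function name and the sample patch are assumptions.

```python
def stretch_contrast(gray):
    """Linearly rescale a grayscale patch (list of rows) to the range 0..255."""
    flat = [p for row in gray for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A flat patch has no contrast to stretch; return a copy unchanged.
        return [row[:] for row in gray]
    return [[(p - lo) * 255 // (hi - lo) for p in row] for row in gray]

# Hypothetical low-contrast eye patch with a darker pupil pixel in the middle.
eye = [
    [90, 100, 110],
    [95, 60, 105],
    [100, 110, 120],
]
enhanced = stretch_contrast(eye)
```

After stretching, the dark pupil stands out more clearly from the surrounding iris and sclera, which helps the later circle detection step.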

A circular Hough transform was applied to detect the pupil. The Hough transform is a feature extraction technique. The classical Hough transform was concerned with identifying lines in an image, but it was later extended to identify the positions of arbitrary shapes, most commonly circles or ellipses.
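The voting idea behind the circular Hough transform can be sketched in a few lines of pure Python. In this simplified version, each edge point votes for every accumulator cell (cx, cy, r) whose circle passes within about half a pixel of it, and the cell with the most votes is taken as the pupil. The function names, the search grid size and the synthetic edge points are assumptions. A real pipeline would take the edge points from an edge detector and would normally use an optimized library routine.

```python
import math
from collections import Counter

def hough_circle(edge_points, width, height, r_min, r_max):
    """Return the (cx, cy, r) accumulator cell with the most votes."""
    votes = Counter()
    for x, y in edge_points:
        for cy in range(height):
            for cx in range(width):
                # Radius of the circle centred at (cx, cy) through this point.
                r = round(math.hypot(x - cx, y - cy))
                if r_min <= r <= r_max:
                    votes[(cx, cy, r)] += 1
    return votes.most_common(1)[0][0]

def synthetic_circle(cx, cy, r, steps=360):
    """Rasterized circle outline, used here in place of real edge pixels."""
    points = set()
    for k in range(steps):
        theta = 2 * math.pi * k / steps
        points.add((round(cx + r * math.cos(theta)),
                    round(cy + r * math.sin(theta))))
    return points

edges = synthetic_circle(20, 15, 6)
pupil = hough_circle(edges, width=40, height=30, r_min=4, r_max=8)
```

Limiting the radius range to plausible pupil sizes keeps the accumulator small and avoids votes for circles that cannot be a pupil.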

Calibration for gaze detection:

- Wait until the user sits in a position where both the iris center and the eye corner are detected in 80% of the frames.

- Display circles at the center and the four corners of the screen, and for each circle wait until at least 15 pupil and eye corner detections have been collected in both eye regions.

- Calculate the average eye corner and pupil center coordinates for each position (center, top left, top right, …).
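The averaging step above can be sketched as follows. The averaging itself follows the description. The pupil-minus-corner offset and the linear interpolation between the top-left and bottom-right targets in `estimate_gaze` are assumptions added to show how the averages might be used, not the project's documented method. All names and sample coordinates are hypothetical.

```python
from statistics import mean

def average_point(points):
    """Average a list of (x, y) points."""
    xs, ys = zip(*points)
    return (mean(xs), mean(ys))

def calibrate(samples):
    """samples maps a target name to a list of (pupil_xy, corner_xy) pairs."""
    profile = {}
    for target, pairs in samples.items():
        pupil = average_point([p for p, _ in pairs])
        corner = average_point([c for _, c in pairs])
        profile[target] = {
            "pupil": pupil,
            "corner": corner,
            # Assumption: pupil position relative to the eye corner.
            "offset": (pupil[0] - corner[0], pupil[1] - corner[1]),
        }
    return profile

def estimate_gaze(offset, profile, screen_w, screen_h):
    """Assumed mapping: interpolate linearly between two calibration targets."""
    x0, y0 = profile["topleft"]["offset"]
    x1, y1 = profile["bottomright"]["offset"]

    def ratio(v, lo, hi):
        if hi == lo:
            return 0.5
        return min(max((v - lo) / (hi - lo), 0.0), 1.0)

    return (ratio(offset[0], x0, x1) * screen_w,
            ratio(offset[1], y0, y1) * screen_h)

# Hypothetical detections (two per target here; the text asks for at least 15).
samples = {
    "topleft": [((52.0, 40.0), (40.0, 42.0)), ((54.0, 42.0), (40.0, 42.0))],
    "bottomright": [((60.0, 46.0), (40.0, 42.0)), ((62.0, 48.0), (40.0, 42.0))],
}
profile = calibrate(samples)
gaze = estimate_gaze((17.0, 2.0), profile, 1920, 1080)
```

Using the offset from the eye corner rather than the raw pupil position makes the estimate less sensitive to small head movements, since the corner moves with the head.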

Results

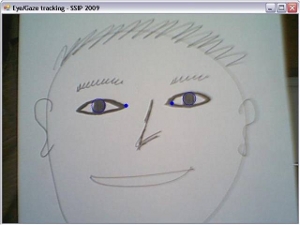

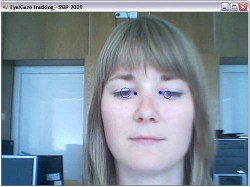

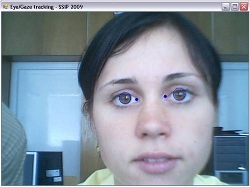

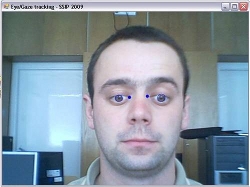

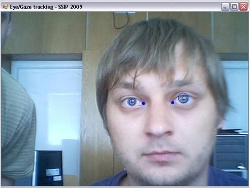

Here are some result pictures of eye detection with our software.

| | Variance (pixel^2) Test 1 | Variance (pixel^2) Test 2 | Variance (pixel^2) Test 3 | Variance (pixel^2) Test 4 | Variance (pixel^2) Test 5 |
|---|---|---|---|---|---|
| Left pupil | 437 | 309 | 292 | 300 | 314 |
| Left corner | 577 | 555 | 567 | 570 | 556 |
| Right pupil | 346 | 288 | 341 | 294 | 296 |
| Right corner | 256 | 293 | 274 | 178 | 220 |
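The text does not state how the variance values in the table were computed. One plausible definition, sketched here as an assumption, is the mean squared distance of the detected positions from their centroid, which has units of pixel^2. The function name and the sample points are hypothetical.

```python
from statistics import mean

def positional_variance(points):
    """Mean squared distance (pixel^2) of (x, y) points from their centroid."""
    cx = mean(x for x, _ in points)
    cy = mean(y for _, y in points)
    return mean((x - cx) ** 2 + (y - cy) ** 2 for x, y in points)

# Hypothetical detections of one feature over four frames.
detections = [(0, 0), (2, 0), (0, 2), (2, 2)]
variance = positional_variance(detections)
```

Under this definition, a lower value means the detected position of a feature is more stable from frame to frame.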

Future development

- Imitating left and right mouse clicks with blink detection

- Recognition even when the face is at a different angle

- Expression detection for different focus regions

- Higher precision, to allow full control by people with disabilities