Research Group on Visual Computation

Calibration of Omnidirectional, Perspective and Lidar Camera Systems

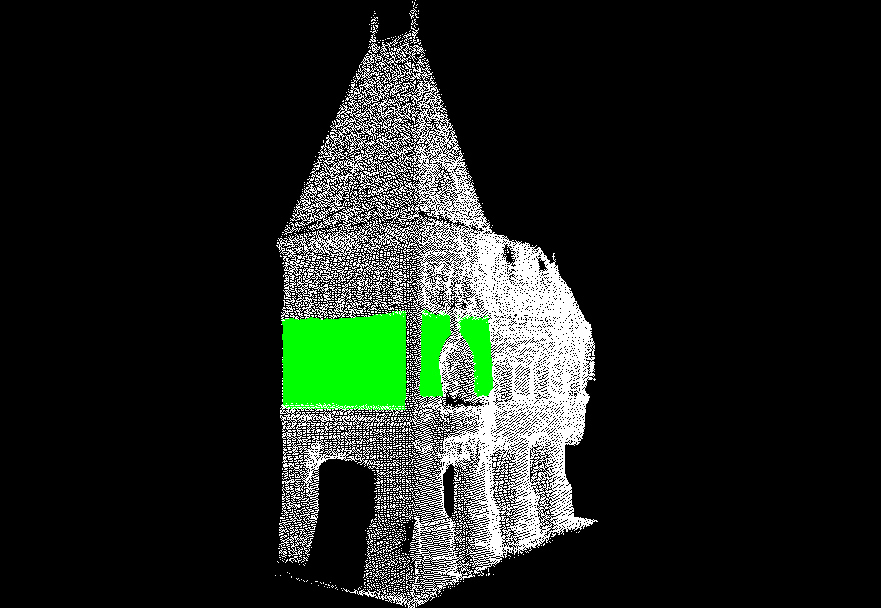

Description

One of the most challenging issues in robotic perception applications is the fusion of information from several different sources. Today the majority of platforms include range (2D or 3D sonar/lidar) and camera (color/infrared, perspective/omni) sensors that usually capture the surrounding environment independently. Although the information from different sources can be used in a complementary way, in order to fuse the data from these independent devices it is highly desirable to have a calibration among them, i.e. to transform the measured data into a common coordinate frame. To achieve this goal, either extrinsic or intrinsic-extrinsic calibration must be performed, depending on whether prior knowledge of the camera intrinsic parameters is available. Extrinsic parameter estimation for a range-camera sensor pair determines the rigid motion between the two reference systems. Extrinsic parameter estimation for a 2D/3D lidar and a central camera (perspective or omnidirectional) has been applied especially in environment mapping applications; however, this problem is far from trivial. Because lidars and cameras work in different ways, the calibration is often performed manually, or by using special calibration targets in the images (e.g. checkerboard patterns), or by point feature extraction methods. These approaches tend to be laborious and time-consuming, especially if the calibration has to be repeated during data acquisition. In practice it is often desirable to have a flexible one-step calibration for systems whose sensors are not necessarily fixed to a common platform.

Other potential application fields for which we have proposed solutions include cultural heritage and the digital preservation of historic objects. For this topic, a modified version of the region based pose estimation method was applied to work on smooth non-planar regions, and later a full pipeline was also proposed for 3D-2D data fusion on large-scale data.

Contribution

In this project, a novel region based framework is developed for calibrating 3D sensors with 2D central cameras. A spherical camera model is used that handles multiple types of omnidirectional as well as perspective cameras in the same framework. Pose estimation is formulated as a 2D-3D nonlinear shape registration task, which is solved without point correspondences or complex similarity metrics. It relies on a set of corresponding planar regions, and the pose parameters are obtained by solving an overdetermined system of nonlinear equations.

Source Code

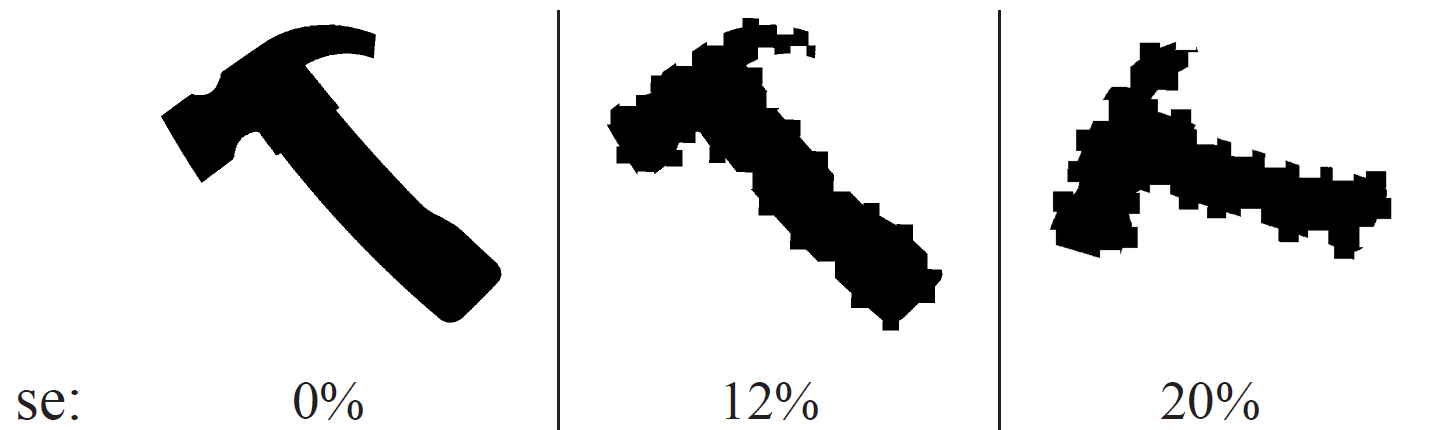

The demo implementation of the proposed algorithm can be found here.

Synthetic tests, robustness to segmentation error

The algorithm was quantitatively evaluated on large synthetic datasets generated with real camera parameters, both perspective and omnidirectional, containing one or more planar regions per scene. Robustness against segmentation errors was also evaluated on simulated data, where we randomly added or removed squares uniformly around the boundary of a region, up to the desired percentage of its area. We found that our method is robust against segmentation errors of up to ≈ 12% if at least 3 non-coplanar regions are used.
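The boundary perturbation used in the robustness test can be sketched roughly as follows. This is a minimal illustration and not the authors' evaluation code: the function names `boundary_pixels` and `perturb_region`, the 5-pixel square size, the 4-neighbour boundary definition and the equal add/remove probability are all assumptions. The description above only states that squares were randomly added or removed around the region boundary, up to a given percentage of the region's area.

```python
import numpy as np


def boundary_pixels(mask):
    """Coordinates of region pixels that have a 4-neighbour outside the region."""
    padded = np.pad(mask, 1, constant_values=False)
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    return np.argwhere(mask & ~interior)


def perturb_region(mask, error_fraction, square=5, seed=0, max_steps=100_000):
    """Flip square patches centred on random boundary pixels until the number
    of changed pixels approaches error_fraction of the region area (never more)."""
    rng = np.random.default_rng(seed)
    original = np.asarray(mask, dtype=bool)
    target = error_fraction * original.sum()
    border = boundary_pixels(original)
    changed = np.zeros_like(original)
    half = square // 2
    for _ in range(max_steps):  # guard against unreachable targets
        r, c = border[rng.integers(len(border))]
        patch = np.zeros_like(original)
        patch[max(r - half, 0):r + half + 1, max(c - half, 0):c + half + 1] = True
        add = rng.random() < 0.5
        # Adding flips background pixels of the patch, removing flips region pixels.
        candidate = changed | (patch & (~original if add else original))
        if candidate.sum() > target:
            break
        changed = candidate
    return original ^ changed


# Hypothetical usage: a 60x60 square region with a 10% segmentation error budget.
mask = np.zeros((100, 100), dtype=bool)
mask[20:80, 20:80] = True
noisy = perturb_region(mask, error_fraction=0.10, seed=1)
print(f"changed fraction: {np.count_nonzero(noisy ^ mask) / mask.sum():.3f}")
```

In this sketch, changes are accumulated relative to the original region, so a later patch never undoes an earlier one. That keeps the error measure monotone and makes the stopping rule simple, at the cost of being only one of several plausible readings of the procedure.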

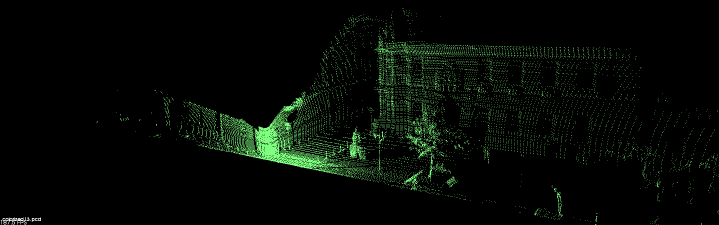

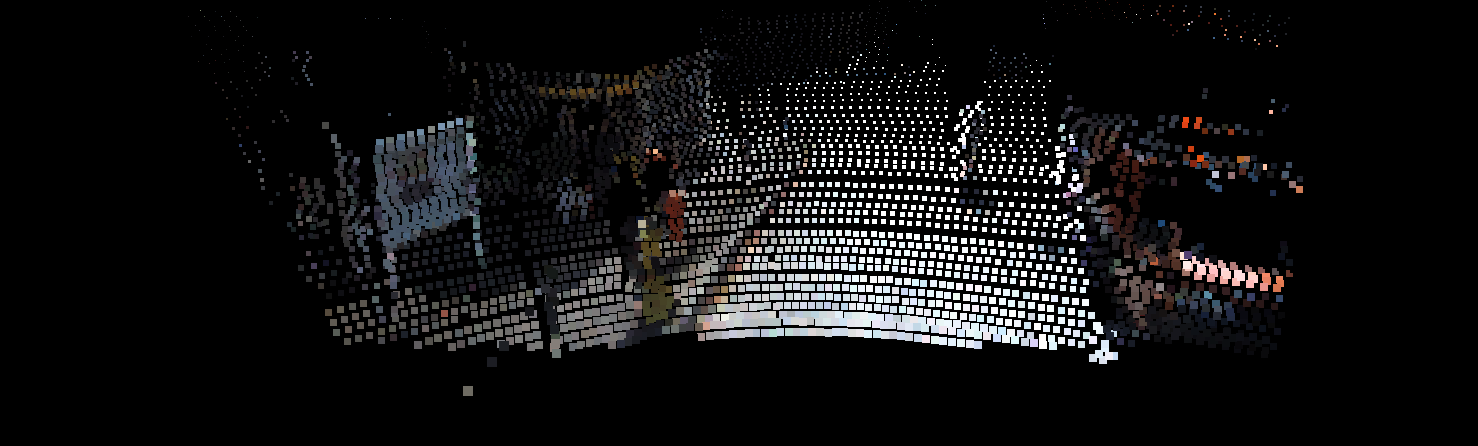

Calibration of a Lidar and two perspective cameras

Calibration of a Lidar and an omni (fish-eye) camera

Fusion results on small objects, and a large scale building

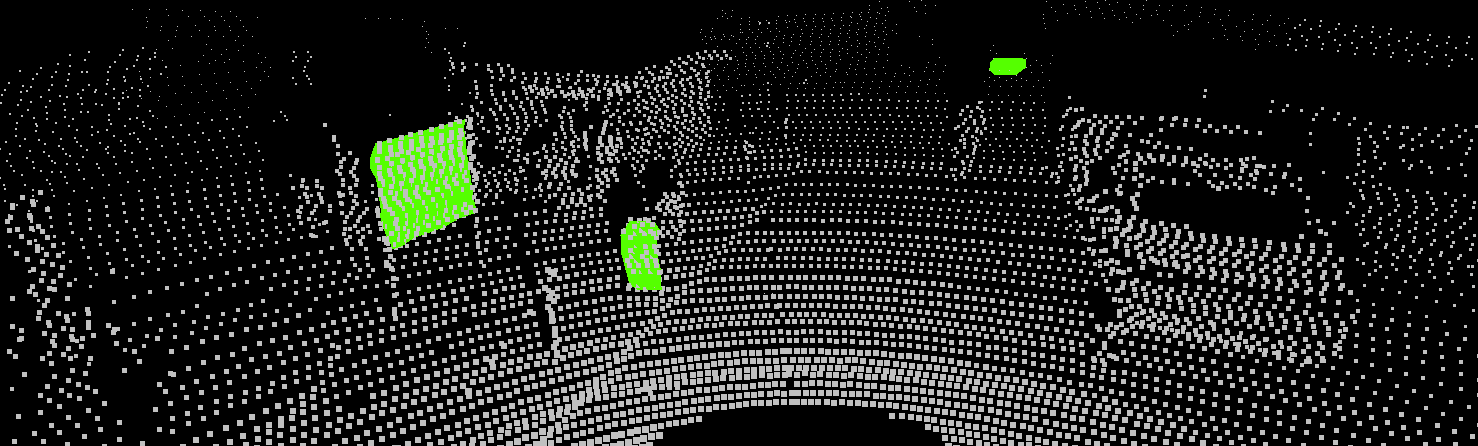

Evaluation on the KITTI dataset

Comparison with other camera pose estimation methods from the literature could be performed only in a limited manner, due to the fundamental differences between the proposed algorithm and existing ones. For comparison, we used the mutual information based method described by Z. Taylor & J. Nieto in ACRA'12, which works on 3D data with intensity and normal information. That algorithm was run on the same 2D-3D data pair as our method, in both its normal based and intensity based configurations. Note that while the algorithm of Z. Taylor can use multiple separate 2D-3D data pairs to optimize the results (if a sequence of such data is available from a rigid Lidar-camera setup, as in the KITTI dataset), for a fair comparison we provided only the single image frame and point cloud pair on which the proposed method was tested. The results of the proposed method proved to be comparable to those of the mutual information based method. The registration result of the proposed method was visually accurate, and the runtime of its CPU implementation was two orders of magnitude smaller than that of the GPU implementation of the mutual information method.
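For readers unfamiliar with the criterion behind the baseline, mutual information between two sets of measurements can be estimated from a joint histogram. The sketch below is a generic illustration only: it is neither the implementation of the cited method nor part of the proposed region based approach, and the function name `mutual_information`, the bin count and the synthetic "aligned" and "misaligned" samples are assumptions made for the example.

```python
import numpy as np


def mutual_information(a, b, bins=32):
    """Histogram-based mutual information (in nats) of two equally sized samples."""
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins)
    p_ab = joint / joint.sum()             # joint distribution
    p_a = p_ab.sum(axis=1, keepdims=True)  # marginal of a, shape (bins, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)  # marginal of b, shape (1, bins)
    nonzero = p_ab > 0                     # skip empty cells to avoid log(0)
    ratio = p_ab[nonzero] / (p_a @ p_b)[nonzero]
    return float(np.sum(p_ab[nonzero] * np.log(ratio)))


# Synthetic stand-ins: image intensities and a lidar attribute sampled at the
# same pixels, once well aligned and once with the correspondence destroyed.
rng = np.random.default_rng(0)
image_intensity = rng.random(5000)
lidar_aligned = image_intensity + 0.05 * rng.standard_normal(5000)
lidar_misaligned = rng.permutation(lidar_aligned)

mi_aligned = mutual_information(image_intensity, lidar_aligned)
mi_misaligned = mutual_information(image_intensity, lidar_misaligned)
print(mi_aligned > mi_misaligned)
```

A registration method built on this idea searches for the pose that maximizes such a score, which is why it needs intensity or normal information on the 3D side, whereas the proposed method relies on corresponding regions instead.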

- Robert Frohlich, Zoltan Kato, Alain Tremeau, Levente Tamás, Shadi Shabo, Yona Waksman, Region based fusion of 3D and 2D visual data for Cultural Heritage objects, In Proceedings of International Conference on Pattern Recognition, IEEE, Cancun, Mexico, pp. 2404-2409, 2016.

- Robert Frohlich, Stefan Gubo, Zoltan Kato, 3D-2D Data Fusion in Cultural Heritage Applications, In Proceedings of ICVGIP Workshop on Digital Heritage, Springer, Guwahati, India, 2016.

- Robert Frohlich, Stefan Gubo, Attila Lévai, Zoltan Kato, 3D-2D Data Fusion in Cultural Heritage Applications, Chapter in Heritage Preservation: A Computational Approach (Bhabatosh Chanda, Subhasis Chaudhuri, Santanu Chaudhury, eds.), Springer Singapore, pp. 111-130, 2018.

- Robert Frohlich, Levente Tamas, Zoltan Kato, Absolute Pose Estimation of Central Cameras Using Planar Regions, In IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 1-1, 2019.

- Levente Tamas, Robert Frohlich, Zoltan Kato, Relative Pose Estimation and Fusion of Omnidirectional and Lidar Cameras, In Proceedings of the ECCV Workshop on Computer Vision for Road Scene Understanding and Autonomous Driving (Lourdes de Agapito, Michael M. Bronstein, Carsten Rother, eds.), Springer, vol. 8926, Zurich, Switzerland, pp. 640-651, 2014.

- Levente Tamas, Zoltan Kato, Targetless Calibration of a Lidar - Perspective Camera Pair, In Proceedings of ICCV Workshop on Big Data in 3D Computer Vision, IEEE, Sydney, Australia, pp. 668-675, 2013.
