Research Group on Visual Computation


Evaluation of Point Matching Methods for Wide-baseline Stereo Correspondence on Mobile Platforms


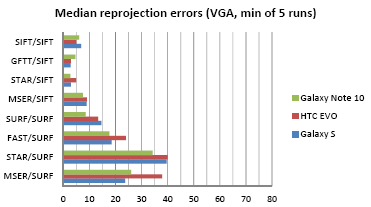

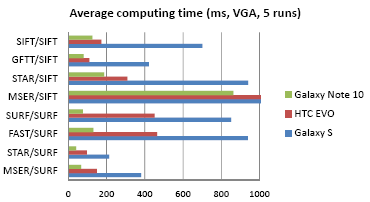

Description

Collaborative generation of virtual views relies heavily on data connections and communication between mobile devices within a local neighbourhood, and on processing and analysing the large amount of pictorial and other sensor data (e.g., location, orientation) these devices produce. We define different tasks that share the common goal of collaborative virtual view generation, and we demonstrate their practical usability. These approaches make it possible to generate and share new information content that can help people make decisions and that would not be available without the collaboration of mobile imaging devices. Such content can be used, e.g., to survey event venues, accidents, or places after a natural disaster. The main advantage of this approach is that the current status can be imaged in near real time, in contrast to StreetView-like applications, which provide rather old data.

The main approaches include collaborative 3D reconstruction, collaborative panoramic image generation, and collaborative synthetic view generation.

- A virtual view can be a 3D model reconstructed from normal or stereo images. Users will be able to observe objects and locations from different viewpoints. Instructions could even be sent to users telling them where to move and which direction to turn to take new images that further improve the reconstruction.
- A wider-angle panoramic image can be generated from typically narrow-angle mobile images. The images should overlap and can come from the same device (taking images or video while moving the device) or from other devices.
- Normal or 3D-reconstructed images from different devices can be used to generate synthetic (e.g., aerial-like) views that give an overview map in traffic jams or at events with a large number of participants.

Such an approach can be useful for detecting important events that affect a large number of people, and it can help, e.g., in organizing rescue plans and in providing faster and more precise information to those affected.
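Collaborative panorama generation requires the overlapping images to be brought into a common frame. A standard textbook approach, valid when the cameras roughly rotate about a common centre or the scene is distant or planar, is to relate two images by a 3×3 homography estimated from at least four point correspondences with the direct linear transform (DLT). This page does not say which alignment method the group uses, so the following is only a minimal NumPy sketch under that assumption; the function names `homography_dlt` and `warp_points` are hypothetical.

```python
import numpy as np


def homography_dlt(src, dst):
    """Estimate H (3x3) such that dst ~ H @ src, from >= 4 (x, y) point pairs (DLT)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.shape[0] < 4:
        raise ValueError("need at least 4 matching (x, y) point pairs")
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    A = np.array(rows)
    # H is the right singular vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the arbitrary scale so that H[2, 2] == 1


def warp_points(H, pts):
    """Map (x, y) points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]
```

With exact correspondences the DLT recovers the homography up to scale. Real matches are noisy and contain outliers, so in practice the coordinates are normalized first and the estimate is wrapped in RANSAC; OpenCV's `cv2.findHomography` provides this.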
Wide-baseline stereo matching is a common problem in computer vision. With the explosion of smartphones equipped with camera modules, many classical computer vision solutions have been adapted to such platforms. Given the widespread networking options available on mobile phones, a set of smartphones can be regarded as an ad-hoc camera network in which each camera has an increasingly powerful computing engine but only limited-bandwidth communication with the other devices. The performance of classical vision algorithms in a collaborative mobile environment is therefore of particular interest. In such a scenario we expect the images to be taken almost simultaneously but from different viewpoints, so the camera poses differ significantly while the lighting conditions are the same. We carried out a quantitative comparison of the most important keypoint detectors and descriptors in the context of wide-baseline stereo matching. We found that current mobile hardware can provide results efficiently for 2-megapixel images.
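Comparing detectors and descriptors ultimately comes down to matching descriptors between two views. This page does not state the matching strategy used in the paper; a common baseline for binary descriptors (such as ORB or BRIEF) is brute-force nearest-neighbour search under the Hamming distance, combined with Lowe's ratio test to discard ambiguous matches, which are frequent in wide-baseline pairs with repetitive structure. The sketch below assumes descriptors are stored as Python integers; the function names and the toy 8-bit descriptor values are hypothetical (real binary descriptors are typically 256 bits long).

```python
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_ratio_test(query: List[int], train: List[int],
                     ratio: float = 0.8) -> List[Tuple[int, int, int]]:
    """Brute-force nearest-neighbour matching with Lowe's ratio test.

    Returns (query_index, train_index, distance) for every query descriptor
    whose nearest neighbour is clearly closer than its second-nearest one.
    """
    matches: List[Tuple[int, int, int]] = []
    if len(train) < 2:
        return matches  # the ratio test needs at least two candidates
    for qi, q in enumerate(query):
        # Distances to all candidates, sorted from nearest to farthest.
        dists = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        (d1, best), (d2, _) = dists[0], dists[1]
        # Keep only distinctive matches; ties or near-ties are rejected.
        if d1 < ratio * d2:
            matches.append((qi, best, d1))
    return matches


query = [0b11110000, 0b10101010]
train = [0b11110001, 0b00001111, 0b10101011, 0b10101000]
print(match_ratio_test(query, train))  # [(0, 0, 1)]
```

The second query descriptor is rejected because two candidates are equally close to it, which is exactly the ambiguity the ratio test is meant to catch. Lowering `ratio` trades recall for precision.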


- Endre Juhasz, Attila Tanacs, Zoltan Kato, Evaluation of Point Matching Methods for Wide-baseline Stereo Correspondence on Mobile Platforms, In Proceedings of the International Symposium on Image and Signal Processing and Analysis (Giovanni Ramponi, Sven Loncaric, Alberto Carini, Karen Egiazarian, eds.), Trieste, Italy, pp. 806-811, 2013.
