Calibration of Omnidirectional, Perspective and Lidar Camera Systems

Faculty of Automation and Computer Science, Technical University of Cluj-Napoca, Romania (Levente Tamas)

One of the most challenging issues in robotic perception applications is the fusion of information from several different sources. Today the majority of platforms include range (2D or 3D sonar/lidar) and camera (color/infrared, perspective/omni) sensors that usually capture the surrounding environment independently, although the information from the different sources can be used in a complementary way. In order to fuse the information from these independent devices, it is highly desirable to calibrate them against each other, i.e. to transform the measured data into a common coordinate frame. To achieve this goal, either extrinsic or joint intrinsic-extrinsic calibration must be performed, depending on whether prior knowledge of the camera intrinsic parameters is available. In the case of extrinsic parameter estimation for a range-camera sensor pair, the rigid motion (rotation and translation) between the two reference systems is determined.
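To make the role of the extrinsic parameters concrete, the following is a minimal sketch, not the method developed in this project. It assumes an ideal pinhole (perspective) camera without lens distortion, a known intrinsic matrix `K`, and an already estimated rotation `R` and translation `t`. The function names and all numeric values are hypothetical and chosen only for illustration; an omnidirectional camera would need a different projection model.

```python
import numpy as np

def lidar_to_camera(points_lidar, R, t):
    """Map N x 3 lidar points into the camera frame: X_cam = R @ X_lidar + t."""
    return points_lidar @ R.T + t

def project_pinhole(points_cam, K):
    """Project N x 3 camera-frame points to pixels with an ideal pinhole model.

    Returns (uv, in_front): uv is N x 2, in_front marks points with z > 0.
    Points that are not in front of the camera get NaN pixel coordinates.
    """
    z = points_cam[:, 2]
    in_front = z > 0
    safe_z = np.where(in_front, z, np.nan)   # avoid dividing by z <= 0
    uvw = points_cam @ K.T
    uv = uvw[:, :2] / safe_z[:, None]
    return uv, in_front

# Illustrative (made-up) values: identity rotation, 10 cm offset along x.
K = np.array([[500.0,   0.0, 320.0],
              [  0.0, 500.0, 240.0],
              [  0.0,   0.0,   1.0]])
R = np.eye(3)
t = np.array([0.1, 0.0, 0.0])

points_lidar = np.array([[0.0, 0.0,  2.0],    # 2 m in front of the sensor
                         [0.0, 0.0, -1.0]])   # behind the camera
uv, in_front = project_pinhole(lidar_to_camera(points_lidar, R, t), K)
```

Once lidar points are expressed in pixel coordinates like this, image colors can be looked up for each visible 3D point, which is what fusing the two sensors in a common coordinate frame amounts to in practice.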

Extrinsic parameter estimation for 2D/3D lidar and perspective cameras has been addressed mainly in environment mapping applications; however, the problem is far from trivial. Because lidar and cameras operate on different principles, the calibration is often performed manually, with special calibration targets in the images (e.g. checkerboard patterns), or with point feature extraction methods. These approaches tend to be laborious and time consuming, especially if the calibration procedure has to be repeated during data acquisition. In practice, it is often desirable to have a flexible one-step calibration for systems whose sensors are not necessarily fixed to a common platform.

In this project, a novel region-based framework is developed for 2D and 3D camera calibration.

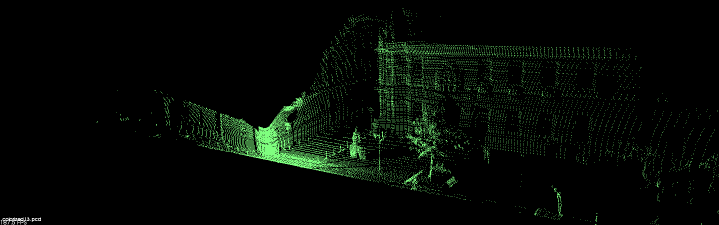

Calibration of a Lidar and two perspective cameras

Input Lidar range image | First perspective camera image | Second perspective camera image

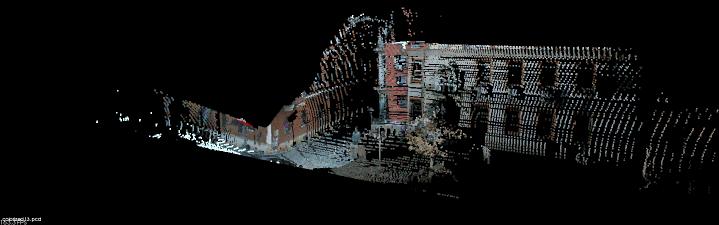

Perspective camera images projected onto the Lidar 3D point cloud after calibration